Facebook Proves Filters Aren't Only for Cigarettes

Taking a page from the Big Tobacco playbook, Facebook VP Nick Clegg insists his platform isn't addictive.

The Sukha

The Filter Bubble

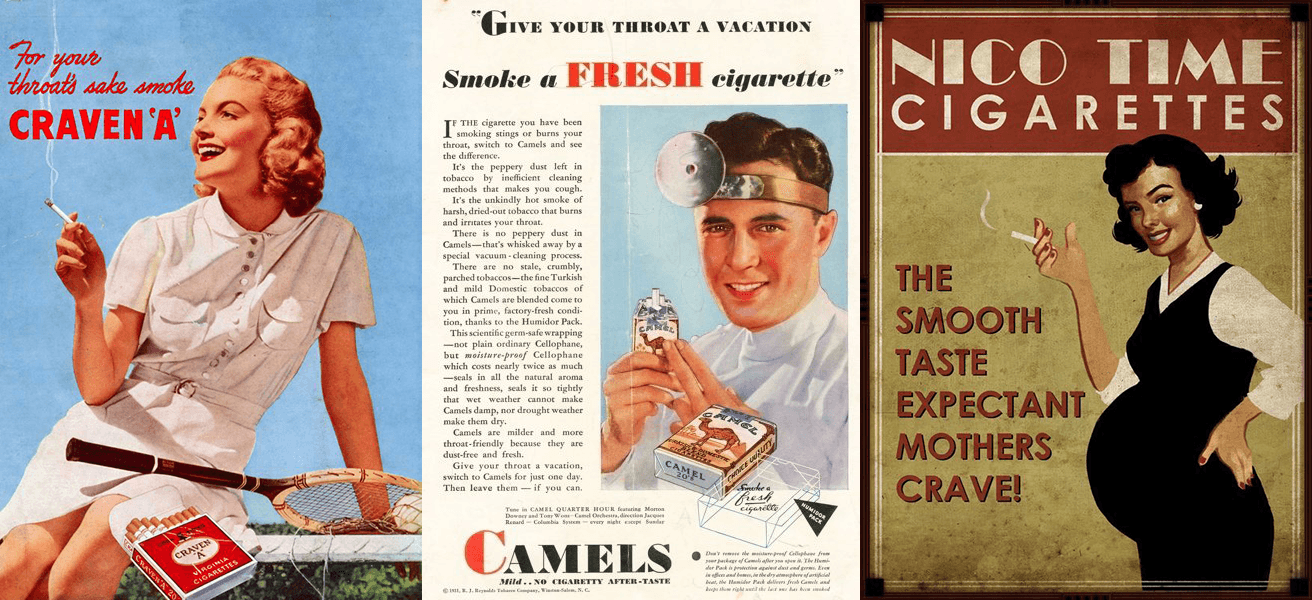

In the mid-20th century, tobacco companies touted the health and lifestyle benefits of smoking, even going so far as to recruit family physicians into their marketing campaigns. Later, while still disingenuously claiming their products were safe, they began adding filters to cigarettes. Filters were pitched as a way to reduce the harm caused by cigarette smoke, but in practice they did nothing except become a defensive talking point for the tobacco lobby.

So it's interesting to hear Nick Clegg this week repeat his corporate talking points while announcing that Facebook will add a filter to its core product: the Newsfeed. It's hard not to draw a parallel.

If your product is harmless, why put a filter between your users and your product?

Nick's corporate rhetoric may frame Newsfeed filters as a remedy for social media addiction, but let's remember that Facebook and its subsidiary Instagram have a years-long history with filters and the algorithms that control them.

Content Filters…and Photo Filters, too

Like cigarette filters, Facebook's filters let users skew reality. These aspirational tools let you nip, tuck, and smooth away whatever features you'd rather not see in the mirror, so much so that teenagers are getting expensive plastic surgery to look better on Instagram.

As Jia Tolentino deftly writes in The New Yorker about the aspirational filter face:

It’s a young face, of course, with poreless skin and plump, high cheekbones. It has catlike eyes and long, cartoonish lashes; it has a small, neat nose and full, lush lips. It looks at you coyly but blankly, as if its owner has taken half a Klonopin and is considering asking you for a private-jet ride to Coachella.

Tobacco companies created filters to push back against growing concerns over the damage their product caused. Filters let smokers believe they weren't actually destroying their bodies.

Facebook's filters don't attack your lungs, but they're just as disorienting. They're an extension of the Facebook ethos: keep tuning the algorithms that keep you addicted, so you spend ever more time on its platforms.

Keeping You Hooked

Like the rest of the “social media isn’t addictive” crowd, Nick Clegg has a vested interest in drawing your eyes away from Facebook’s ability to steal hours of your life. He's trying to lay a filter over the reality of his platform.

As VP of Global Affairs for the platform (and a former politician), he’s paid, and paid well, to tell you that you’re smarter than the fools who believe social media is addictive. He’s a brilliant orator, and Zuck was smart to hire him to promote these lies.

In a recent Medium post, he criticizes anyone who dares to make such accusations, writing:

In each of these dystopian depictions, people are portrayed as powerless victims, robbed of their free will. Humans have become the playthings of manipulative algorithmic systems. But is this really true? Have the machines really taken over?

As expected, the rest of the post dwells on personal responsibility and agency: what shows up in your Newsfeed is the result of your connections, your friends, and the groups you join. The onus of addiction is placed on the user, not the product.

You can just not smoke, you know.

Sound familiar?

Sadly, reality filtered through Clegg's lens obscures the truth: the product Facebook's engineers build is intentionally designed to hijack our brains. Watch any of the talks by Chamath Palihapitiya, Facebook's former vice president of user growth, about the rigorous optimizations and A/B tests used to maximize time on site and time in app.

The filter is the noise Facebook apologists flood social media with to disguise their true intention: maximizing the time you spend on Facebook's platforms so the company can sell your data to advertisers.

As with Big Tobacco, no matter how many filters these apologists use, the toxins still seep through.